You may never have heard the term “synthetic media,” more commonly known as “deepfakes,” but our military, law enforcement and intelligence agencies certainly have. They are hyper-realistic video and audio recordings that use artificial intelligence and “deep” learning to create “fake” content or “deepfakes.” The U.S. government has grown increasingly concerned about their potential to be used to spread disinformation and commit crimes. That’s because the creators of deepfakes have the power to make people say or do anything, at least on our screens. Most Americans have no idea how far the technology has come in just the last four years or the danger, disruption and opportunities that come with it.

Deepfake Tom Cruise: You know I do all my own stunts, obviously. I also do my own music.

Chris Ume/Metaphysic

This is not Tom Cruise. It’s one of a series of hyper-realistic deepfakes of the movie star that began appearing on the video-sharing app TikTok earlier this year.

Deepfake Tom Cruise: Hey, what’s up TikTok?

For days people wondered if they were real, and if not, who had created them.

Deepfake Tom Cruise: It’s important.

Finally, a modest, 32-year-old Belgian visual effects artist named Chris Umé stepped forward to claim credit.

Chris Umé: We believed as long as we’re making clear this is a parody, we’re not doing anything to harm his image. But after a few videos, we realized like, this is blowing up; we’re getting millions and millions and millions of views.

Umé says his work is made easier because he teamed up with a Tom Cruise impersonator whose voice, gestures and hair are nearly identical to the real McCoy. Umé only deepfakes Cruise’s face and stitches that onto the real video and sound of the impersonator.

Deepfake Tom Cruise: That’s where the magic happens.

For technophiles, DeepTomCruise was a tipping point for deepfakes.

Deepfake Tom Cruise: Still got it.

Bill Whitaker: How do you make this so seamless?

Chris Umé: It begins with training a deepfake model, of course. I have all the face angles of Tom Cruise, all the expressions, all the emotions. It takes time to create a really good deepfake model.

Bill Whitaker: What do you mean “training the model?” How do you train your computer?

Chris Umé: “Training” means it’s going to analyze all the images of Tom Cruise, all his expressions, compared to my impersonator. So the computer’s gonna teach itself: When my impersonator is smiling, I’m gonna recreate Tom Cruise smiling, and that’s, that’s how you “train” it.

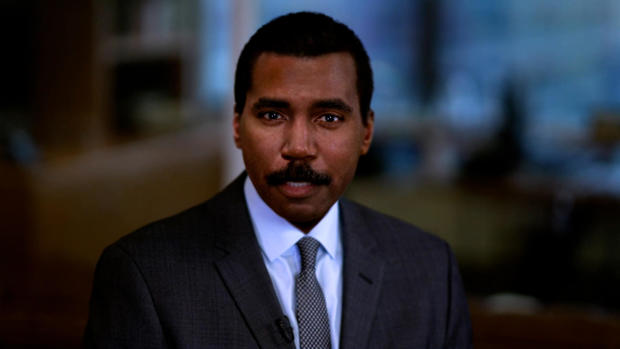

Using video from the CBS News archives, Chris Umé was able to train his computer to learn every aspect of my face, and wipe away the decades. This is how I looked 30 years ago. He can even remove my mustache. The possibilities are endless and a little frightening.

Chris Umé: I see a lot of mistakes in my work. But I don’t mind it, actually, because I don’t want to fool people. I just want to show them what’s possible.

Bill Whitaker: You don’t want to fool people.

Chris Umé: No. I want to entertain people, I want to raise awareness, and I want to show where it’s all going.

Nina Schick: It is without a doubt one of the most important revolutions in the future of human communication and perception. I would say it’s analogous to the birth of the internet.

Political scientist and technology consultant Nina Schick wrote one of the first books on deepfakes. She first came across them four years ago when she was advising European politicians on Russia’s use of disinformation and social media to interfere in democratic elections.

Bill Whitaker: What was your reaction when you first realized this was possible and was going on?

Nina Schick: Well, given that I was coming at it from the perspective of disinformation and manipulation in the context of elections, the fact that AI can now be used to make images and video that are fake, that look hyper-realistic. I thought, well, from a disinformation perspective, this is a game-changer.

So far, there’s no evidence deepfakes have “changed the game” in a U.S. election, but earlier this year the FBI put out a notification warning that “Russian [and] Chinese… actors are using synthetic profile images,” creating deepfake journalists and media personalities to spread anti-American propaganda on social media.

The U.S. military, law enforcement and intelligence agencies have kept a wary eye on deepfakes for years. At a 2019 hearing, Senator Ben Sasse of Nebraska asked if the U.S. is prepared for the onslaught of disinformation, fakery and fraud.

Ben Sasse: When you think about the catastrophic potential to public trust and to markets that could come from deepfake attacks, are we organized in a way that we could possibly respond fast enough?

Dan Coats: We clearly need to be more agile. It poses a major threat to the United States and something that the intelligence community needs to be restructured to address.

Since then, technology has continued moving at an exponential pace while U.S. policy has not. Efforts by the government and big tech to detect synthetic media are competing with a community of “deepfake artists” who share their latest creations and techniques online.

Like the internet, the first place deepfake technology took off was in pornography. The sad fact is the majority of deepfakes today consist of women’s faces, mostly celebrities, superimposed onto pornographic videos.

Nina Schick: The first use case in pornography is just a harbinger of how deepfakes can be used maliciously in many different contexts, which are now starting to arise.

Bill Whitaker: And they’re getting better all the time?

Nina Schick: Yes. The incredible thing about deepfakes and synthetic media is the pace of acceleration when it comes to the technology. And by five to seven years, we are basically looking at a trajectory where any single creator, so a YouTuber, a TikToker, will be able to create the same level of visual effects that is only accessible to the most well-resourced Hollywood studio today.

The technology behind deepfakes is artificial intelligence, which mimics the way humans learn. In 2014, researchers for the first time used computers to create realistic-looking faces using something called “generative adversarial networks,” or GANs.

Nina Schick: So you set up an adversarial game where you have two AIs combating each other to try and create the best fake synthetic content. And as these two networks combat each other, one trying to generate the best image, the other trying to detect where it could be better, you basically end up with an output that is increasingly improving all the time.
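
The adversarial game Schick describes can be sketched in a few lines of code. What follows is a toy illustration only, not how any deepfake tool mentioned in this report is built: it swaps images for single numbers, and every name in it (`discriminator_step`, `generator_step`, `train`) is hypothetical. A "forger" turns random noise into a number, a "detector" scores how real a number looks, and each takes one small learning step against the other in turn.

```python
import math
import random


def sigmoid(s: float) -> float:
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def discriminator_step(w, c, real, fake, lr):
    """One learning step for the detector.

    The detector scores a sample x as sigmoid(w * x + c); a score
    near 1 means "looks real". (Toy stand-in for a neural network.)
    """
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    # Gradient ascent on log D(real) + log(1 - D(fake)).
    w += lr * ((1.0 - d_real) * real - d_fake * fake)
    c += lr * ((1.0 - d_real) - d_fake)
    return w, c


def generator_step(a, b, w, c, z, lr):
    """One learning step for the forger, which outputs a * z + b."""
    fake = a * z + b
    d_fake = sigmoid(w * fake + c)
    # Gradient ascent on log D(fake): nudge the fake toward "looks real".
    push = (1.0 - d_fake) * w
    a += lr * push * z
    b += lr * push
    return a, b


def train(steps=2000, lr=0.01, seed=0):
    """Alternate detector and forger steps; return their final parameters."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # forger starts out producing numbers near 0
    w, c = 0.0, 0.0  # detector starts out with no opinion
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # assumed "real" data: numbers near 4
        z = rng.gauss(0.0, 1.0)     # random noise fed to the forger
        fake = a * z + b
        w, c = discriminator_step(w, c, real, fake, lr)
        a, b = generator_step(a, b, w, c, z, lr)
    return a, b, w, c
```

Real systems replace both one-line models with large neural networks and the single numbers with images, but the back-and-forth is the same idea. Simple setups like this one are not guaranteed to settle down; the forger's output can keep swinging around the real data rather than landing on it.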

Schick says the power of generative adversarial networks is on full display at a website called “ThisPersonDoesNotExist.com.”

Nina Schick: Every time you refresh the page, there’s a new image of a person who does not exist.

Each is a one-of-a-kind, entirely AI-generated image of a human being who never has, and never will, walk this Earth.

Nina Schick: You can see every pore on their face. You can see every hair on their head. But now imagine that technology being expanded out not only to human faces, in still images, but also to video, to audio synthesis of people’s voices, and that’s really where we’re heading right now.

Bill Whitaker: This is mind-blowing.

Nina Schick: Yes. [Laughs]

Bill Whitaker: What’s the positive side of this?

Nina Schick: The technology itself is neutral. So just as bad actors are, without a doubt, going to be using deepfakes, it is also going to be used by good actors. So first of all, I would say that there’s a very compelling case to be made for the commercial use of deepfakes.

Victor Riparbelli is CEO and co-founder of Synthesia, based in London, one of dozens of companies using deepfake technology to transform video and audio productions.

Victor Riparbelli: The way Synthesia works is that we’ve essentially replaced cameras with code, and once you’re working with software, we do a lotta things that you wouldn’t be able to do with a normal camera. We’re still very early. But this is gonna be a fundamental change in how we create media.

Synthesia makes and sells “digital avatars,” using the faces of paid actors to deliver personalized messages in 64 languages… and allows corporate CEOs to address employees overseas.

Snoop Dogg: Did somebody say, Just Eat?

Synthesia has also helped entertainers like Snoop Dogg go forth and multiply. This elaborate TV commercial for European food delivery service Just Eat cost a fortune.

Snoop Dogg: J-U-S-T-E-A-T-…

Victor Riparbelli: Just Eat has a subsidiary in Australia, which is called Menulog. So what we did with our technology was we switched out the word Just Eat for Menulog.

Snoop Dogg: M-E-N-U-L-O-G… Did somebody say, “MenuLog?”

Victor Riparbelli: And all of a sudden they had a localized version for the Australian market without Snoop Dogg having to do anything.

Bill Whitaker: So he makes twice the money, huh?

Victor Riparbelli: Yeah.

All it took was eight minutes of me reading a script on camera for Synthesia to create my synthetic talking head, complete with my gestures, head and mouth movements. Another company, Descript, used AI to create a synthetic version of my voice, with my cadence, tenor and syncopation.

Deepfake Bill Whitaker: This is the result. The words you’re hearing were never spoken by the real Bill into a microphone or to a camera. He merely typed the words into a computer and they come out of my mouth.

It may look and sound a little rough around the edges right now, but as the technology improves, the possibilities of spinning words and images out of thin air are endless.

Deepfake Bill Whitaker: I’m Bill Whitaker. I’m Bill Whitaker. I’m Bill Whitaker.

Bill Whitaker: Wow. And the head, the eyebrows, the mouth, the way it moves.

Victor Riparbelli: It’s all synthetic.

Bill Whitaker: I could be lounging at the beach. And say, “Folks, you know, I’m not gonna come in today. But you can use my avatar to do the work.”

Victor Riparbelli: Maybe in a few years.

Bill Whitaker: Don’t tell me that. I’d be tempted.

Tom Graham: I think it will have a big impact.

The rapid advances in synthetic media have caused a virtual gold rush. Tom Graham, a London-based lawyer who made his fortune in cryptocurrency, recently started a company called Metaphysic with none other than Chris Umé, creator of DeepTomCruise. Their goal: develop software to allow anyone to create Hollywood-caliber movies without lights, cameras, or even actors.

Tom Graham: As the hardware scales and as the models become more efficient, we can scale up the size of that model to be an entire Tom Cruise body, movement and everything.

Bill Whitaker: Well, talk about disruptive. I mean, are you gonna put actors out of jobs?

Tom Graham: I think it’s a great thing if you’re a well-known actor today because you may be able to let somebody collect data for you to create a version of yourself in the future where you could be acting in movies after you have deceased. Or you could be the director, directing your younger self in a movie or something like that.

If you are wondering how all of this is legal, most deepfakes are considered protected free speech. Attempts at legislation are all over the map. In New York, commercial use of a performer’s synthetic likeness without consent is banned for 40 years after their death. California and Texas prohibit deceptive political deepfakes in the lead-up to an election.

Nina Schick: There are so many ethical, philosophical gray zones here that we really need to think about.

Bill Whitaker: So how do we as a society grapple with this?

Nina Schick: Just understanding what’s going on. Because a lot of people still don’t know what a deepfake is, what synthetic media is, that this is now possible. The counter to that is, how do we inoculate ourselves and understand that this kind of content is coming and exists without being completely cynical? Right? How do we do it without losing trust in all authentic media?

That’s going to require all of us to figure out how to maneuver in a world where seeing is not always believing.

Produced by Graham Messick and Jack Weingart. Broadcast associate, Emilio Almonte. Edited by Richard Buddenhagen.
